Each year, about 1.35 million people are killed in crashes on the world’s roads, and as many as 50 million others are seriously injured, according to the World Health Organization. In the United States, fatalities rose drastically during the pandemic, leading to the largest six-month spike ever recorded, according to estimates from the U.S. Department of Transportation. Speeding, distraction, impaired driving and not wearing a seatbelt were top causes.

Artificial intelligence is already being used to enhance driving safety, for example in cellphone apps that monitor behavior behind the wheel and reward safe drivers with perks, and in connected vehicles that communicate with each other and with road infrastructure.

Acusensus, based in Australia, is among the companies that employ artificial intelligence to address road safety. Its cameras — “intelligent eyes,” as Acusensus calls them — use high-resolution imaging in conjunction with machine learning to identify dangerous driving behaviors that are often difficult to detect and enforce.

“We’ve got technology that can save lives,” said Mark Etzbach, the company’s vice president of sales for North America.

In regions where precise and relevant data exists, the report noted, “A.I. can identify dangerous locations proactively before crashes happen.” In Bellevue, Wash., a recent assessment used advanced A.I. algorithms and video analytics at 40 intersections

Introduction to artificial intelligence

Artificial Intelligence (AI) is a branch of computer science that aims to create intelligent machines capable of performing tasks that typically require human intelligence. These tasks include learning, reasoning, problem-solving, perception, language understanding, and decision-making. The overarching goal of AI is to develop systems that can emulate or simulate human intelligence, enabling them to adapt, learn from experience, and perform tasks autonomously.

The field of AI encompasses a broad spectrum of approaches and techniques, ranging from rule-based systems and symbolic reasoning to more recent advancements in machine learning and neural networks.

Artificial Intelligence has seen rapid advancements in recent years, with applications in various industries such as healthcare, finance, transportation, and entertainment. As AI continues to evolve, it holds the potential to bring about transformative changes in how we live, work, and interact with technology.

Let’s go through the role of artificial intelligence in each of the following:

- In-Vehicle:

Artificial Intelligence (AI) in vehicles is designed to prevent accidents by providing an additional layer of safety. AI systems can detect and respond to potential hazards on the road in real time. Some of the ways AI is used to prevent accidents in vehicles are as follows:

1. Automatic Emergency Braking (AEB):

AEB automatically applies the brakes in case of a sudden stop by a vehicle in front of it. It uses a combination of sensors, cameras, and AI algorithms to detect potential collisions and automatically applies the brakes if necessary. AEB systems mostly use radar and vision sensors to identify potential collision partners ahead of the ego vehicle. These systems often require multiple sensors to obtain accurate, reliable, and robust detections while minimizing false positives. To combine the data from various sensors, multiple-sensor AEB systems use sensor fusion technology.
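As a rough illustration of sensor fusion, the sketch below combines a radar and a camera distance estimate by inverse-variance weighting, so the less noisy sensor counts for more. This is one common textbook approach, not necessarily what any production AEB system uses; the function name `fuse` and the variance values are assumptions made for the example.

```python
def fuse(radar_m, radar_var, camera_m, camera_var):
    """Inverse-variance weighted fusion of two distance estimates (metres).

    Hypothetical helper: the lower a sensor's variance, the more weight it gets.
    Returns the fused distance and its (smaller) variance.
    """
    w_radar = 1.0 / radar_var
    w_camera = 1.0 / camera_var
    fused = (w_radar * radar_m + w_camera * camera_m) / (w_radar + w_camera)
    fused_var = 1.0 / (w_radar + w_camera)
    return fused, fused_var


# Radar says 20 m (variance 1), camera says 24 m (variance 4):
# the fused estimate stays closer to the radar reading.
distance, variance = fuse(20.0, 1.0, 24.0, 4.0)
```

The fused variance is always smaller than either input variance, which is one reason multi-sensor systems can be more robust than single-sensor ones.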

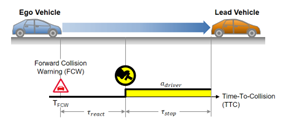

AEB works in conjunction with the Forward Collision Warning (FCW) system. FCW alerts the driver by sound or a visible sign on the dashboard. Generally, FCW activates before AEB kicks in. First, the FCW warns the driver about the obstacle ahead, and if the driver fails to take appropriate action, the automatic braking system intervenes.

Glance through the points below to understand the automatic emergency braking system’s working mechanism.

- The sensors and/or cameras constantly monitor the distance between your car and the obstacle (moving car, pedestrian, etc.) ahead.

- If the distance reduces rapidly, for instance, if the vehicle in front brakes suddenly, the system immediately triggers a warning.

- The driver receives an alert message via an audio or visual medium.

- If you are too late to react, the AEB comes into action and automatically applies the brakes.

- The ECU (Electronic Control Unit) monitors your input and can detect when you come off the throttle and apply the brakes yourself, so AEB will not kick in unnecessarily.

- The Anti-Lock Braking System (ABS) helps AEB stop/slow down the vehicle efficiently.

- The entry-level AEB systems work only at slow speeds. They can be helpful when you drive in the city.

- The more sophisticated automatic braking systems work across a wider speed range. Hence, they may avoid or mitigate the intensity of a high-speed collision.

- The most advanced AEB systems can also detect stationary objects, moving pedestrians, cyclists, and cars.
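The warn-first, brake-second sequence in the points above can be sketched with a simple time-to-collision (TTC) rule. This is a minimal sketch under stated assumptions: the thresholds `FCW_TTC_S` and `AEB_TTC_S` and the function names are hypothetical, and real systems tune such values per vehicle and speed range.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    WARN = "forward collision warning"
    BRAKE = "automatic emergency braking"


# Hypothetical thresholds in seconds, chosen only for illustration.
FCW_TTC_S = 2.5
AEB_TTC_S = 1.2


def time_to_collision(gap_m: float, closing_speed_mps: float) -> float:
    """Seconds until impact if the closing speed stays constant."""
    if closing_speed_mps <= 0:  # gap is steady or growing: no collision course
        return float("inf")
    return gap_m / closing_speed_mps


def decide(gap_m: float, closing_speed_mps: float, driver_braking: bool) -> Action:
    """Warn first; brake automatically only if the driver has not reacted."""
    ttc = time_to_collision(gap_m, closing_speed_mps)
    if ttc <= AEB_TTC_S and not driver_braking:
        return Action.BRAKE
    if ttc <= FCW_TTC_S:
        # Also reached when the driver is already braking inside the AEB window.
        return Action.WARN
    return Action.NONE
```

For example, `decide(20, 10, False)` gives a warning (TTC of 2 seconds), while `decide(10, 10, False)` triggers braking (TTC of 1 second).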

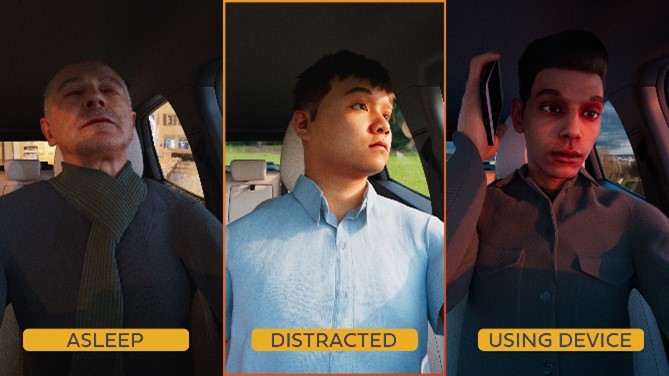

2. Driver Monitoring:

Driver Monitoring using AIoT refers to the use of artificial intelligence and the Internet of Things (IoT) to monitor and analyze driving behavior. AIoT delivers real-time information about driving patterns, performance, and safety, making it possible to improve road safety and reduce accidents. AI algorithms are used to analyze data from sensors, cameras, and other devices installed in vehicles, enabling the system to quickly identify behaviors of interest such as speed, acceleration, deceleration, and more. This information is then transmitted over the internet, allowing for instant analysis and actionable responses. By continuously monitoring driving behavior, AIoT-based systems can help drivers stay alert and focused, reduce fatigue, and avoid dangerous driving behaviors.

Methodology for Developing a Driver Monitoring System:

The development process involves importing required libraries, visualizing the dataset, creating train and validation data, implementing data augmentation, and utilizing transfer learning for model creation and training.

a. Dataset Selection for Driver Monitoring System Prototype:

The Small Home Objects (SHO) image dataset on Kaggle, comprising 1,312 high-resolution images of 10 small household objects, is used for computer vision and machine learning research in object recognition and classification tasks.

The dataset’s small size and relatively simple objects make it valuable for training and testing models, particularly for beginners and those interested in straightforward classification problems.

b. Implementation of Driver Monitoring System Prototype:

The implementation involves environment setup, data visualization, train-test split, data augmentation, and hands-on with code for the project in a Kaggle notebook.

c. Training Data and Data Visualization:

This step covers the validation data directory, target size, batch size, and label type used for training. It starts with importing libraries, then performs data visualization and a train-test split.
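The notebook code itself is not reproduced here, so the following is only a framework-free sketch of the train/validation split idea. The function `stratified_split`, the 20% validation share, and the file names and labels in the usage note are all assumptions for illustration.

```python
import random
from collections import defaultdict


def stratified_split(samples, val_fraction=0.2, seed=42):
    """Split (path, label) pairs so every label appears in both sets in similar proportion.

    Hypothetical helper: keeps at least one validation image per label.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train, val = [], []
    for label, paths in by_label.items():
        rng.shuffle(paths)
        n_val = max(1, round(len(paths) * val_fraction))
        val += [(p, label) for p in paths[:n_val]]
        train += [(p, label) for p in paths[n_val:]]
    return train, val
```

Calling it on ten images labeled `"cup"` and five labeled `"key"` (made-up labels) would put two cups and one key into the validation set and the rest into training.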

d. Model Training and Plotting:

A function is created to plot the training history of the model, showing validation accuracy and loss over epochs. The new model is defined with additional layers on a pre-trained VGG19 model and trained for five epochs with SGD optimizer and categorical cross-entropy loss.

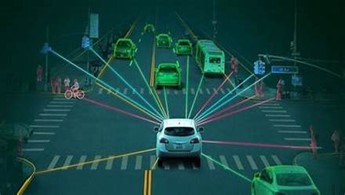

3. Traffic Management and Navigation:

Some of the proven benefits of AIoT-enabled smart traffic solutions include:

- Decreased vehicle emissions

- Accurate traffic counts and real-time recording

- Reduced waiting times at traffic lights and on freeways

- Efficient reactions to changing traffic patterns and road conditions

- Fewer accidents, using recorded accounts and training to identify human error

- Detection of anomalies in real time, balancing traffic capacity and demand

- Safer roads and civilians in urban areas

SYSTEM IMPLEMENTATION

Here’s a system implementation for Traffic Management and Navigation using AIoT:

Components:

- Edge Devices:

Traffic sensors (cameras, radars, loop detectors)

Traffic lights

Roadside units (RSUs)

Vehicles with onboard sensors and communication modules.

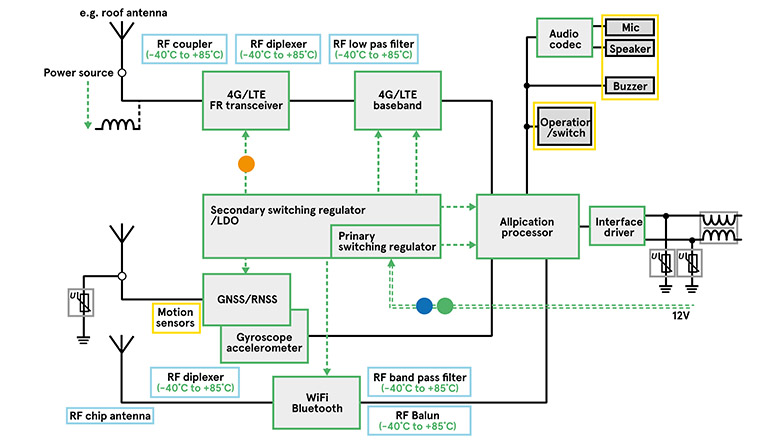

- Network Connectivity:

Cellular networks (4G/5G)

Dedicated short-range communications (DSRC)

Wi-Fi

V2X (Vehicle-to-Everything) technologies

- Cloud Platform:

High-performance servers

Data storage and management tools

AI/ML algorithms for data analysis and prediction

- User Applications:

Traffic management systems for city operators

Navigation apps for drivers

Public information displays

Implementation Steps:

- Data Collection:

Edge devices collect real-time traffic data (vehicle speed, volume, density, incidents).

Data is transmitted to the cloud platform via network connectivity.

- Data Processing and Analysis:

Cloud platform processes and analyzes data using AI/ML algorithms.

Traffic patterns, congestion levels, and potential issues are identified.

- Decision-Making:

AI-powered systems make decisions to optimize traffic flow, reduce congestion, and improve safety.

Adaptive traffic signal control, dynamic lane management, and incident response strategies are implemented.

- Action:

Control signals are sent back to edge devices (traffic lights, RSUs) to implement actions.

Real-time navigation guidance is provided to drivers via apps or connected vehicles.
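To make the decision-making step more concrete, here is a minimal sketch of adaptive signal timing: a fixed cycle is shared among approaches in proportion to their measured queue lengths. It is a deliberately simple heuristic rather than a deployed control algorithm, and the function name, cycle length, and minimum green time are assumptions.

```python
def allocate_green(queues, cycle_s=90.0, min_green_s=10.0):
    """Split one signal cycle among approaches in proportion to queue length.

    queues: dict mapping an approach name to the number of waiting vehicles.
    Every approach gets at least min_green_s; the rest is shared by demand.
    """
    if cycle_s < min_green_s * len(queues):
        raise ValueError("cycle too short for the minimum green times")
    spare = cycle_s - min_green_s * len(queues)
    total = sum(queues.values())
    plan = {}
    for approach, queue in queues.items():
        # With no traffic at all, fall back to an even split.
        share = queue / total if total else 1.0 / len(queues)
        plan[approach] = min_green_s + spare * share
    return plan
```

With `allocate_green({"north": 30, "east": 10}, cycle_s=60)`, the busier northern approach would receive 40 seconds of green and the eastern approach 20 seconds.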

AIoT Enhancements:

- On-Device AI: Edge devices can perform some AI processing locally, reducing latency and network load.

- Federated Learning: Edge devices can collaboratively train AI models without sharing sensitive data.

- Reinforcement Learning: AI systems can learn and adapt to changing traffic patterns over time.

- Explainable AI: Understanding the reasoning behind AI decisions can improve trust and acceptance.
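The federated learning idea in the list above can be illustrated with federated averaging: each edge device trains locally and shares only its model weights, which the server averages by sample count. This is a bare-bones sketch with plain Python lists; the function name and the toy numbers are assumptions, and real deployments add secure aggregation, many rounds, and much larger models.

```python
def federated_average(updates):
    """Combine local model weights without seeing any raw traffic data.

    updates: list of (weights, n_samples) pairs, one per edge device.
    Returns the sample-weighted mean of the weight vectors.
    """
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no samples reported by any device")
    size = len(updates[0][0])
    averaged = [0.0] * size
    for weights, n in updates:
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Two hypothetical roadside units: the one with more samples has more influence.
global_weights = federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)])
```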

- Bike

Smart Helmets: AI-powered helmets can provide real-time information about the surroundings, helping bikers make informed decisions and avoid accidents. Smart helmets are revolutionizing personal protection and rider experience in various disciplines, from motorcycles and bicycles to construction and sports. These helmets go beyond their traditional role of safety by integrating advanced technology, offering features that enhance comfort, communication, and even entertainment.

A wireless smart helmet increases biker safety by removing any physical connection between the helmet and the smartphone, which also gives the biker more freedom of movement. The helmet instantly detects the degree of trauma by comparing the sensor reading against a predefined threshold, so the system can decide whether to request urgent help or whether the biker is still safe. The system can also be used to track the helmet’s owner, which is useful in settings such as industrial sites, mining, hiking, and riding.

The proposed system (i.e., the smart helmet) has hardware and software sections. Android, Firebase, and Arduino tools were used to build it.

The hardware section needs the following components:

− An Arduino board to monitor the status of the piezoelectric sensors and transfer their values via Wi-Fi or Bluetooth.

− A sensor to measure trauma (or knock) intensity.

− A link between the Android application and the helmet.

− A suitable helmet that can house all the components.

− A suitable power supply (e.g., a battery cell) to power the proposed system.

− A compatible charging board to recharge the battery when required.

The ATtiny85 is the microcontroller used in this helmet because of its small size and sufficient capabilities for the system. The ATtiny85 USB development board receives signals from the knock sensor (i.e., the piezoelectric sensor) and then sends these readings to the Android application via a Bluetooth module (HC-05) for accident detection purposes.
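The threshold check described above might look like the following on the application side. This is a sketch in Python rather than the actual helmet firmware or app code, and the thresholds `MINOR_KNOCK` and `SEVERE_KNOCK` are made-up values that would need calibration on real hardware.

```python
# Hypothetical thresholds for a 10-bit piezo reading (0-1023).
MINOR_KNOCK = 200
SEVERE_KNOCK = 600


def classify_knock(reading: int) -> str:
    """Map one raw sensor reading to a trauma degree."""
    if reading >= SEVERE_KNOCK:
        return "severe"
    if reading >= MINOR_KNOCK:
        return "minor"
    return "none"


def needs_help(readings) -> bool:
    """Request urgent help if any reading in the window is a severe knock."""
    return any(classify_knock(r) == "severe" for r in readings)
```

A window such as `[12, 250, 40]` would be treated as a minor knock with no alert, while `[12, 700, 40]` would trigger a request for help.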

- Elderly Fall Prevention:

Combining IoT devices and AI technologies to create intelligent, autonomous systems is very useful in elderly fall prevention. AIoT can play a vital role by assisting seniors in their daily activities, monitoring their health and mobility, and alerting family members or caregivers in case of an emergency.

- Wearable Devices:

AI-powered wearable devices can monitor the movements and behavior of the elderly. In case of a fall, these devices can automatically alert emergency services or caregivers.

Airbag jackets: A wearable airbag protects against falls, which are one of the major causes of injury for older people. Moreover, the device automatically places an emergency call to predefined phone numbers (such as relatives). The algorithm detects the fall and activates the airbag to protect the most vulnerable areas of the body:

- Hips: More than 95% of hip fractures are caused by falling, usually sideways

- Back & Shoulders: Every year, around the world, between 250,000 and 500,000 people suffer a spinal cord injury (SCI). The majority are due to preventable causes such as falls, road traffic crashes, or violence.

- Head: Over 2,100,000 patients are hospitalized every year because of a fall injury, most often because of a head injury or hip fracture.

The automatic e-call is generated to one or more people that are warned about the fall, and the GPS locates the person in case of need.
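The article does not describe the jacket’s actual algorithm, so the sketch below shows just one plausible pre-impact approach: treating a short run of near-weightless accelerometer samples as the start of a fall. The thresholds, the sample count, and the function names are all assumptions.

```python
import math

G = 9.81                    # standard gravity in m/s^2
FREE_FALL_G = 0.4           # hypothetical: below this magnitude the body is falling
MIN_FREE_FALL_SAMPLES = 3   # hypothetical: consecutive samples needed to trigger


def magnitude_g(sample):
    """Acceleration magnitude of an (ax, ay, az) sample, in units of g."""
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az) / G


def detect_free_fall(samples, min_samples=MIN_FREE_FALL_SAMPLES):
    """Return the index at which a fall is confirmed, or None if no fall is seen."""
    run = 0
    for i, sample in enumerate(samples):
        if magnitude_g(sample) < FREE_FALL_G:
            run += 1
            if run >= min_samples:
                return i  # point where the airbag and e-call would be triggered
        else:
            run = 0  # a normal reading resets the count
    return None
```

Requiring several consecutive low readings is a simple way to avoid triggering on a single noisy sample.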

- Airbag:

1. Motorcycle airbags:

Motorcycle Airbags Powered by AIoT: Enhancing Rider Safety with Intelligence

Motorcycle airbags are already revolutionizing rider safety by offering additional protection beyond traditional helmets and gear. Coupling them with AIoT (Artificial Intelligence of Things) takes things a step further, introducing intelligent features that can significantly enhance their effectiveness and overall rider experience.

How AIoT Elevates Motorcycle Airbag Systems:

1. Predictive Deployment:

-Sensors integrated into the motorcycle and rider’s gear (jacket, pants) can gather real-time data on various parameters like speed, acceleration, lean angle, and braking force.

-AI algorithms analyze the data in real-time, predicting imminent crashes with high accuracy.

-The airbag system deploys proactively before impact, maximizing protection for the rider’s vital organs.

2. Dynamic Inflation Control:

-AI can determine the severity of the potential impact and adjust the airbag inflation accordingly.

-For minor collisions, a partial inflation might suffice, minimizing discomfort and unnecessary deployments.

-In severe cases, full inflation with precise pressure control ensures optimal protection.

3. Post-Crash Support:

-Sensors can detect if the rider remains unconscious after a crash.

-AI can trigger emergency calls automatically, providing critical assistance and reducing response times.

-The system can also share crash details and location with other connected riders and emergency personnel, coordinating help efficiently.
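As a rough sketch of predictive deployment and dynamic inflation control, the rules below stand in for what would really be a trained model. The limits `MAX_LEAN_DEG` and `CRASH_DECEL_MPS2`, the speed scaling, and all names are hypothetical and chosen only to make the idea concrete.

```python
from dataclasses import dataclass


@dataclass
class RiderState:
    speed_mps: float
    decel_mps2: float       # positive when slowing down
    lean_angle_deg: float


# Hypothetical limits; a real system would learn these from crash data.
MAX_LEAN_DEG = 60.0
CRASH_DECEL_MPS2 = 15.0


def should_deploy(state: RiderState) -> bool:
    """Flag an imminent crash on extreme lean or violent deceleration."""
    return (abs(state.lean_angle_deg) > MAX_LEAN_DEG
            or state.decel_mps2 > CRASH_DECEL_MPS2)


def inflation_level(state: RiderState) -> float:
    """Fraction of full inflation: partial at low speed, full at 20 m/s or more."""
    if not should_deploy(state):
        return 0.0
    return min(1.0, max(0.5, state.speed_mps / 20.0))
```

For instance, a hard stop at 10 m/s would give half inflation, while a slide at 30 m/s with a 70 degree lean would give full inflation.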

Current state of Motorcycle Airbags:

Mechanical activation: Traditional airbags rely on tether systems or sensors triggered by impacts to deploy.

Limited coverage: Protection is typically focused on the chest and upper body.

Reactive rather than predictive: They react to accidents after they occur.

AIoT integration brings about transformative changes:

Enhanced Safety:

-Smart sensors: Accelerometers, gyroscopes, and other sensors constantly monitor the motorcycle’s movement and rider behavior.

-AI-powered algorithms: Analyze sensor data in real-time, predicting potential crash scenarios and activating the airbag just before impact, offering a proactive approach.

-Improved airbag design: Targeted deployment zones based on the predicted impact location and type can provide more comprehensive protection.

Data Insights and Analytics:

-Crash data collection: Accident details like impact forces, direction, and motorcycle behavior are recorded.

-Rider behavior analysis: Insights into speed, braking patterns, and riding style can help riders improve their skills and awareness.

-Accident prevention tools: Predictive safety warnings based on real-time data can alert riders to potential hazards and encourage safer riding practices.

- Camera View:

AIoT can provide real-time monitoring and alert systems that can detect anomalies or abnormalities in camera feeds, such as sudden movements, unusual activity or even changes in lighting conditions. This technology can alert operators to potential accidents or hazards, allowing them to take appropriate action before an incident occurs.

1) 360-Degree Vision: AI-enhanced camera systems can provide a comprehensive view of the surroundings, eliminating blind spots for drivers.

This system uses a high-resolution machine vision sensor and custom optics to provide 360-degree inspection of a device using only one camera. The part is illuminated using a backlight, and the clear conveyor belt is servo controlled.

- Hardware Requirements:

This may include cameras for 360-degree vision, sensors, microcontrollers or single-board computers (like Raspberry Pi), and any additional hardware needed for connectivity.

- Camera Setup:

Install and configure 360-degree cameras to capture the entire surroundings. Ensure that the cameras are positioned strategically to cover blind spots and critical areas.

- Connectivity:

Implement an IoT (Internet of Things) solution for connectivity. This may involve choosing a communication protocol (e.g., MQTT, CoAP) and setting up a network infrastructure to transmit data from the cameras to a central server.

- Edge Computing:

Integrate edge computing capabilities to process data locally on the cameras or edge devices. This helps in reducing latency and bandwidth usage by performing initial data analysis at the source.

- AI Models:

Develop or integrate pre-trained AI models for object detection, recognition, and tracking. This is crucial for identifying potential hazards such as pedestrians, vehicles, or obstacles.

- Data Processing and Analytics:

Implement algorithms for processing the data collected by the cameras. Analyze the information to detect anomalies or potential accidents. This could involve real-time data analysis or periodic data processing.

- Cloud Integration:

Connect your system to a cloud platform for centralized monitoring, analytics, and storage. This allows for remote monitoring and management of the entire system.

- Alerting System:

Set up an alerting system to notify relevant parties in case of potential accidents. This could include visual alerts, audio alarms, or notifications sent to mobile devices.

- User Interface:

Develop a user interface for monitoring the system. This can be a web-based dashboard or a mobile application that provides real-time information about the surroundings and alerts.

- Testing:

Thoroughly test your system in different scenarios to ensure its reliability and accuracy. Consider simulating various accident scenarios to validate the effectiveness of your preventive measures.

- Deployment:

Deploy the system in the target environment and continuously monitor its performance. Make any necessary adjustments based on real-world feedback and data.
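The data processing and alerting steps above can be illustrated with simple frame differencing, which flags sudden movement or lighting changes between consecutive frames. This sketch uses plain nested lists as grayscale frames and a made-up threshold; a real system would more likely use an image library and a trained detection model.

```python
def mean_abs_diff(prev, curr):
    """Average absolute pixel change between two equally sized grayscale frames."""
    total = 0
    count = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += abs(a - b)
            count += 1
    return total / count if count else 0.0


def detect_anomalies(frames, threshold=30.0):
    """Return indices of frames that differ sharply from the frame before them.

    The threshold is a hypothetical value on a 0-255 brightness scale.
    """
    return [i for i in range(1, len(frames))
            if mean_abs_diff(frames[i - 1], frames[i]) > threshold]
```

Each flagged index is a candidate for an operator alert; in practice the threshold would be tuned per camera to balance missed events against false alarms.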

2) Smart Surveillance:

AI enhances surveillance systems by providing intelligent analytics. This includes tracking objects, identifying patterns, and generating alerts for unusual activities. Smart surveillance systems equipped with AI can reduce false alarms and improve response times.

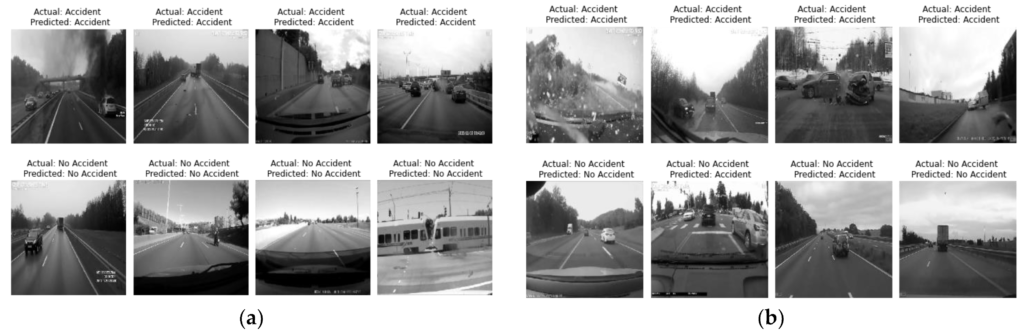

A computer vision-based accident detection and rescue system uses the pre-trained YOLOv3 model for accident identification. The YOLO model is trained on footage from varied conditions, such as rain, fog, and low visibility, so that it can detect accidents in those situations.

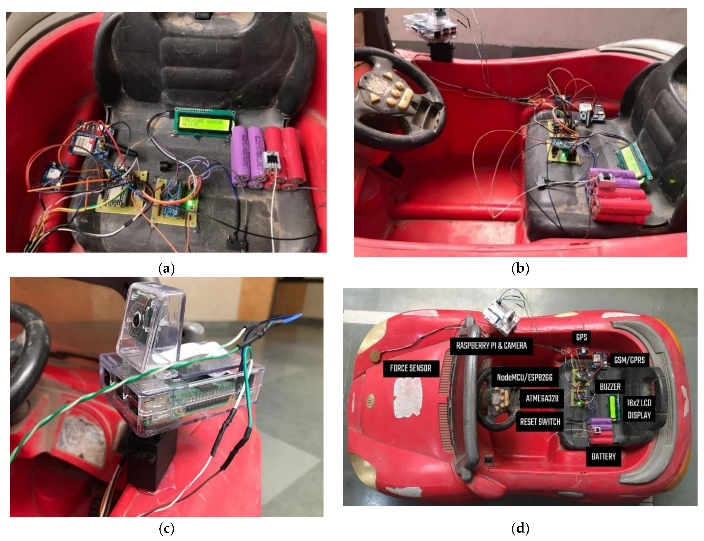

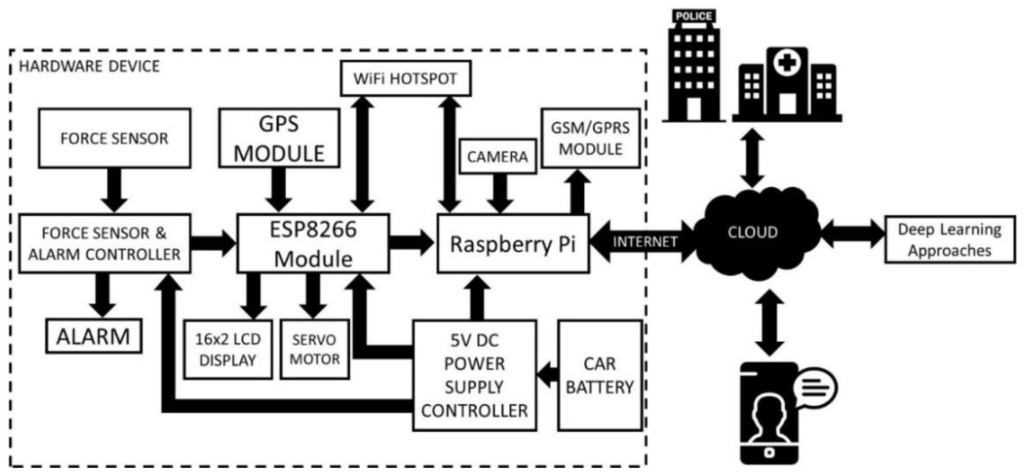

One integrated accident detection and rescue system uses an on-board unit (OBU) to identify and report vehicle accidents. All accident-related information, such as speed, the type of vehicle involved, and airbag status, is gathered through various sensors and sent to the cloud, where machine learning approaches are used to predict the accident. A Wi-Fi web camera pre-installed on the highway continuously monitors vehicles passing along the road and sends the footage to the cloud, where a deep neural network performs the video analysis. If an accident is detected, the information is sent to the nearby control room.

Another system is based on convolutional neural networks (CNNs). Its model has two classes, accident and non-accident. Live video from a camera is continuously transferred to a server, where the CNN classifies the video into those two categories. The reported accuracy of the accident detection model is 95%. This system only detects accidents and does not consider the rescue operation.

In the YOLOv3-based system, the pre-trained model is trained on varied conditions, such as rain, fog, and low visibility, and image augmentation techniques are used to increase the dataset size. The model’s input is the live video from a camera installed on the road.

This module uses the capabilities of IoT and AI to detect an accident and its intensity. Various sensors are used to collect accident-related information such as location, force, etc., and identify accidents. In order to minimize the false alarm rate, deep learning techniques are used.
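One simple way to picture how combining sensors and a vision model can cut false alarms is to require both to agree before raising an alert. The sketch below is an illustration of that idea only, not the method used by the systems described here; the class name, window size, and probability threshold are assumptions.

```python
from collections import deque


class AccidentConfirmer:
    """Raise an alert only when recent video frames and an impact sensor agree."""

    def __init__(self, window=5, min_hits=3, prob_threshold=0.8):
        self.recent = deque(maxlen=window)   # last few per-frame decisions
        self.min_hits = min_hits
        self.prob_threshold = prob_threshold

    def update(self, accident_prob: float, impact_sensor_triggered: bool) -> bool:
        """Feed one frame's accident probability plus the current sensor state."""
        self.recent.append(accident_prob >= self.prob_threshold)
        vision_agrees = sum(self.recent) >= self.min_hits
        return vision_agrees and impact_sensor_triggered
```

A single high-probability frame is not enough on its own: the classifier has to flag several recent frames, and the impact sensor has to fire as well.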

Initially, the accident-related information is collected through the IoT kit and sent on for processing. Instead of using the cloud or a server to process the collected data, this approach uses fog computing to process the information locally. The method employs a machine learning methodology to detect accidents, which may not provide acceptable accuracy due to the complexity of the input video data.

A major limitation of this approach is that the camera is located on the dashboard, where it may be damaged during the accident. The approach relies only on the camera and does not use any IoT module, which may increase the false positive rate.

- Sensors:

1. Proximity Sensors:

AI-driven proximity sensors can detect objects or obstacles in the vehicle’s vicinity, helping in parking and avoiding collisions.

A proximity sensor is a component that is designed to detect the absence or presence of an object without the need for physical contact. They are non-contact devices, highly useful for working with delicate or unstable objects that could be damaged by contact with other types of sensors.

This non-contact operation also means that most types of proximity sensors (excluding types such as magnetic proximity sensors) have a prolonged lifespan. This is because they have semiconductor outputs, meaning that no contacts are used for output.

Proximity sensors are designed to provide a high-speed response (the interval between the point when the object triggers the sensor and the point when the output activates). Different types use varying sensing technologies but they all have the same purpose.

Proximity sensors play a crucial role in various applications across different industries. Here are some reasons why proximity sensors are important:

- Non-Contact Sensing: Proximity sensors allow for non-contact sensing, which means they can detect objects without physically touching them. This feature is particularly useful in applications where contact could damage the object or where cleanliness is essential.

- Accurate Positioning: Proximity sensors provide accurate positioning information, allowing precise control of machinery and equipment. They can detect the exact position of an object, ensuring precise movements and preventing collisions.

- Reliable Object Detection: Proximity sensors offer reliable object detection, even in harsh environments. They can detect objects regardless of their material or surface properties, making them suitable for a wide range of applications.

- Fast Response Time: Proximity sensors have a fast response time, enabling quick detection and response to changes in the proximity of objects. This feature is crucial in applications where real-time monitoring and control are required.

- Versatile Applications: Proximity sensors find applications in various industries, including manufacturing, automotive, robotics, and security systems. They are used for object detection, distance measurement, position sensing, and more.

The sensor works by emitting an electromagnetic field or a beam of electromagnetic radiation and looking for changes in the field or in the returned signal. The sensor then provides an output to a connected device.

Operating Principles

Detection Principle of Inductive Proximity Sensors:

Inductive Proximity Sensors detect magnetic loss due to eddy currents that are generated on a conductive surface by an external magnetic field. An AC magnetic field is generated on the detection coil, and changes in the impedance due to eddy currents generated on a metallic object are detected.

Other methods include Aluminum-detecting Sensors, which detect the phase component of the frequency, and All-metal Sensors, which use a working coil to detect only the changed component of the impedance. There are also Pulse-response Sensors, which generate an eddy current in pulses and detect the time change in the eddy current with the voltage induced in the coil.

(Qualitative Explanation)

The sensing object and Sensor form what appears to be a transformer-like relationship.

The transformer-like coupling condition is replaced by impedance changes due to eddy-current losses.

The impedance changes can be viewed as changes in the resistance that is inserted in series with the sensing object.

(This does not actually occur, but thinking of it this way makes it easier to understand qualitatively.)

Detection Principle of Capacitive Proximity Sensors

Capacitive Proximity Sensors detect changes in the capacitance between the sensing object and the Sensor. The amount of capacitance varies depending on the size and distance of the sensing object. An ordinary Capacitive Proximity Sensor is similar to a capacitor with two parallel plates, where the capacity of the two plates is detected. One of the plates is the object being measured (with an imaginary ground), and the other is the Sensor’s sensing surface. The changes in the capacity generated between these two poles are detected.

The objects that can be detected depend on their dielectric constant, but they include resin and water in addition to metals.
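The dependence on size, distance, and dielectric constant follows from the idealized parallel-plate formula. The worked example below ignores fringe fields, and the plate area and gap are illustrative values rather than figures for any particular sensor.

```latex
C = \varepsilon_0 \, \varepsilon_r \, \frac{A}{d},
\qquad \varepsilon_0 \approx 8.854 \times 10^{-12}\ \mathrm{F/m}

% Example (assumed values): A = 1\ \mathrm{cm^2} = 10^{-4}\ \mathrm{m^2},
% d = 5\ \mathrm{mm} = 5 \times 10^{-3}\ \mathrm{m}, air gap (\varepsilon_r \approx 1)
C \approx 8.854 \times 10^{-12} \times \frac{10^{-4}}{5 \times 10^{-3}}
  \approx 1.77 \times 10^{-13}\ \mathrm{F} \approx 0.18\ \mathrm{pF}
```

Halving the distance doubles the capacitance, and a material with a high dielectric constant, such as water with a relative permittivity of roughly 80, raises it far more than air does, which is why such objects are easy to detect.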

Detection Principle of Magnetic Proximity Sensors:

The reed end of the switch is operated by a magnet. When the reed switch is turned ON, the Sensor is turned ON.

2. Environment Monitoring:

Sensors equipped with AI can monitor road conditions, weather, and traffic patterns to provide real-time information and enhance driving safety. AIoT enables the collection and analysis of large amounts of data from various sensors and sources, allowing for faster and more accurate detection of accidents.

Components:

- Sensors:

-Accelerometers and Gyroscopes: These sensors can detect sudden changes in acceleration or orientation, which might indicate an accident.

-GPS Module: To track the location of the device.

-Temperature and Humidity Sensors: To monitor environmental conditions.

- AI Algorithms:

-Machine Learning Models: Train models to recognize patterns associated with accidents. You might use supervised learning with labeled datasets to train the models.

-Edge Computing: Perform some processing on the edge devices to reduce latency and send only relevant information to the cloud.

- IoT Devices:

-Microcontrollers (e.g., Arduino, Raspberry Pi): These can be used as the edge devices to collect data from sensors, process it, and send relevant information to the cloud.

-Connectivity Modules (e.g., Wi-Fi, Bluetooth, GSM): Enable communication between the edge devices and the central server/cloud.

- Cloud Platform:

-Cloud Server (e.g., AWS, Azure, Google Cloud): Collect and process data from the edge devices. Host the AI models and manage the overall system.

-Database: Store historical data for analysis and reporting.

- Communication Protocols:

-MQTT, CoAP: Lightweight protocols suitable for IoT communication.

-HTTP/HTTPS: For communication with the cloud server.

-Data Collection

Considerations:

-Power Consumption: Ensure that the devices have sufficient power for continuous operation.

-Scalability: Design the system to handle an increasing number of devices.

-Security: Implement robust security measures to protect data transmission and storage.

-Regulatory Compliance: Ensure that the system complies with relevant regulations and standards.
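The edge computing idea from the components above, sending only relevant information to the cloud, can be sketched as a filter that builds a JSON event only when the acceleration reading is unusually high. The threshold, field names, and function name are assumptions, and the actual transport (MQTT, CoAP, or HTTPS) is deliberately left out.

```python
import json
import math

ACCEL_ALERT_MPS2 = 30.0  # hypothetical threshold for a possible accident


def build_event(device_id, accel_xyz, lat, lon, temperature_c):
    """Return a JSON payload for the cloud, or None if the reading is unremarkable."""
    magnitude = math.sqrt(sum(a * a for a in accel_xyz))
    if magnitude < ACCEL_ALERT_MPS2:
        return None  # nothing worth transmitting: saves power and bandwidth
    return json.dumps({
        "device_id": device_id,
        "accel_mps2": round(magnitude, 2),
        "lat": lat,
        "lon": lon,
        "temperature_c": temperature_c,
    }, sort_keys=True)
```

Dropping routine readings on the device addresses both the power consumption and the scalability considerations, since the cloud only receives the events it needs to act on.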

- Techniques to Reduce Risk of Collision:

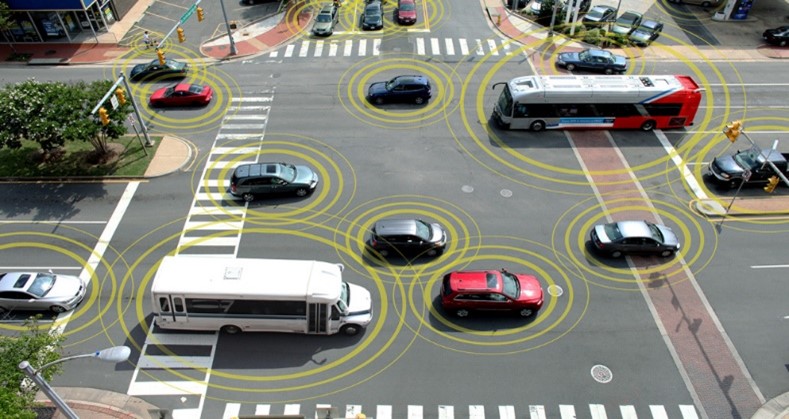

V2V (Vehicle-to-Vehicle) Communication: AI-enabled communication between vehicles can share real-time data about their positions and movements, allowing for collaborative collision avoidance.

V2V could capture and transmit these inputs, among others. By the time V2V arrives in cars, some may be stripped out for the sake of simplicity or cost-cutting.

-Vehicle speed

-Vehicle position and heading (direction of travel)

-On or off the throttle (accelerating, driving, slowing)

-Brakes on, anti-lock braking

-Lane changes

-Stability control, traction control engaged

-Windshield wipers on, defroster on, headlamps on in daytime (raining, snowing)

-Gear position (a car in reverse might be backing out of a parking stall)
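A few of the inputs listed above can be pictured as fields of a broadcast message. The sketch below is not based on any V2V message standard: the `V2VMessage` fields, the flat x/y coordinates, and the 300 m default range are simplifying assumptions for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class V2VMessage:
    """Hypothetical subset of the status a vehicle might broadcast."""
    vehicle_id: str
    x_m: float            # position on a flat local grid, in metres
    y_m: float
    heading_deg: float
    speed_mps: float
    brakes_on: bool = False
    abs_active: bool = False
    gear: str = "D"


def hard_braking_nearby(own: V2VMessage, other: V2VMessage, range_m: float = 300.0) -> bool:
    """Warn when a vehicle within radio range is braking hard enough to engage ABS."""
    distance = math.hypot(other.x_m - own.x_m, other.y_m - own.y_m)
    return distance <= range_m and other.brakes_on and other.abs_active
```

Because the check relies on received messages rather than cameras, it would still work when the braking vehicle is hidden around a bend.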

As automotive technology continues to move towards fully autonomous vehicles, V2V is expected to follow a similar evolutionary path. Initially, the systems will provide a warning to the driver of the vehicle, but once systems are more mature, they may be able to control the vehicle by braking or steering around obstacles.

Obstacle detection is one of the primary areas for vehicle vision systems and, although great advances have been made, there are limitations. For example, a vision system would struggle to detect a hazard such as a broken-down vehicle or queue of traffic around a bend, but a V2V implementation would not be constrained by line-of-sight and could reliably detect such situations. In reality, the inputs from vision systems and V2V are likely to be merged in ‘sensor fusion’ for confirmation and to eliminate false notifications.

V2V would form a mesh network and dedicated short-range communications (DSRC) is one technology being proposed by organisations such as the FCC and ISO. This is similar to WiFi, as it operates at 5.9GHz and has a range of approximately 300 metres – which equates to around 10 seconds on a highway. However, with up to 10 ‘hops’ on the mesh the ‘visibility’ of the V2V system extends to around a mile, which gives plenty of warning on busy roads where the mesh can extend this far.

Early implementations of V2V are likely to be a ‘warning only’ system where a visual indicator or audible warning (or both) are given to the driver. These will rapidly increase in sophistication and may indicate the direction and proximity of the issue as well as its nature. With systems such as automated emergency braking (AEB) becoming more prevalent, it won’t be long before vehicles are able to bring themselves to a safe halt based upon an alert from the V2V system.

The future of connected vehicle technology

What will connected car technology look like in the future? For consumers, it may be a self-driving car that gets them safely to their destination without a driver. For businesses, it may mean driverless trucks, but also concerns over cyberattacks.

Concerns over the safety and privacy of connected car technologies are currently being debated and worked through on multiple levels – from private tech companies to government agencies. The ever changing needs of compliance raise questions, along with insurance and liability concerns in the case of accidents.

By integrating AIoT into these aspects, we can significantly enhance safety measures and reduce the risk of accidents across different scenarios.
